
Neural Networks - Nonlinear Activations

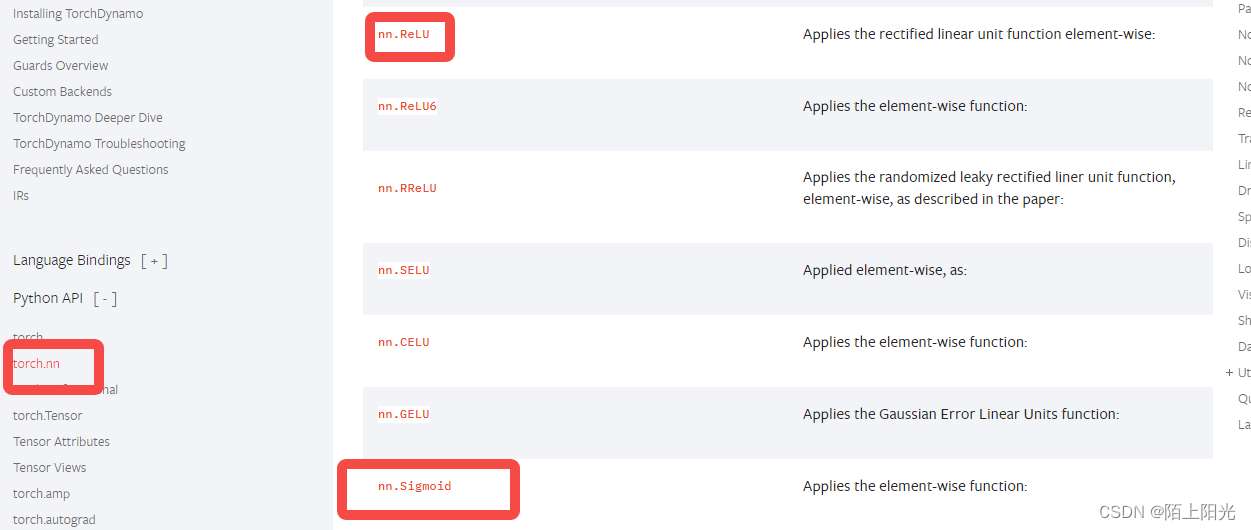

- Official documentation

- Common activation 1: ReLU

- inplace

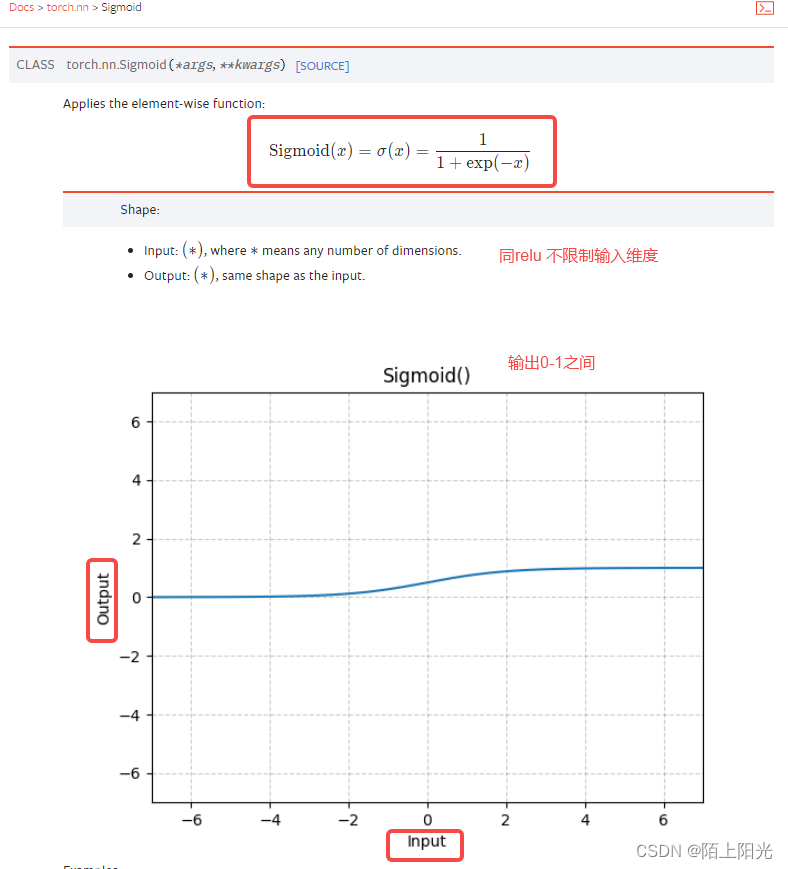

- Common activation 2: Sigmoid

- Code

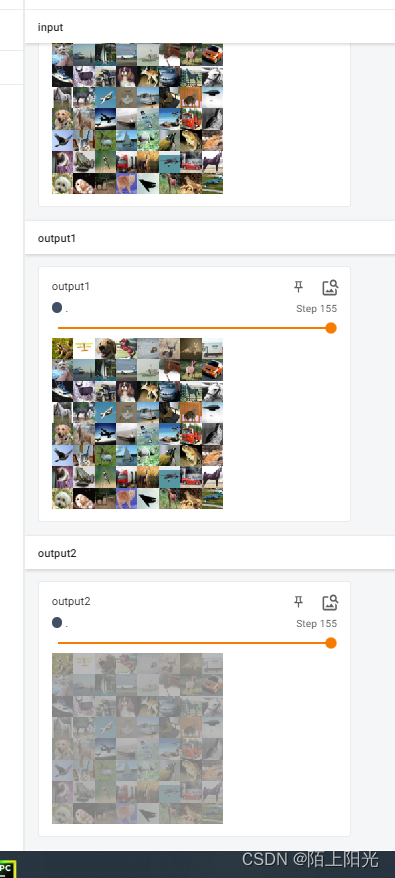

- logs

Notes from Xiaotudui's (小土堆) PyTorch video course on Bilibili. A great uploader whose explanations are detailed and clear.

Official documentation

https://pytorch.org/docs/stable/nn.html#non-linear-activations-weighted-sum-nonlinearity

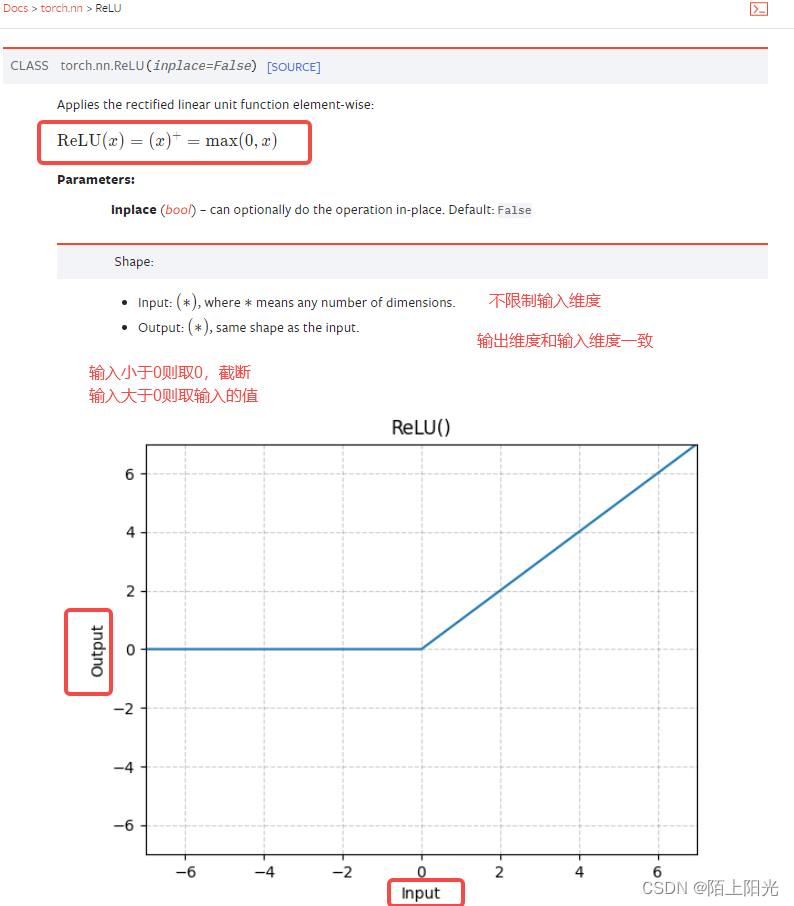

Common activation 1: ReLU

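Applies a truncating nonlinearity to the input, ReLU(x) = max(0, x): negative values become 0 and non-negative values pass through unchanged. This nonlinearity is what helps the model generalize.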

```python
>>> import torch
>>> from torch import nn
>>> m = nn.ReLU()
>>> input = torch.randn(2)
>>> output = m(input)
```
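To make the truncation concrete, the sketch below feeds a fixed 2×2 tensor (illustrative values chosen here instead of `torch.randn`) through `nn.ReLU` and checks it against `torch.clamp(x, min=0)`, which computes the same max(0, x) element-wise.

```python
import torch
from torch import nn

m = nn.ReLU()
# Illustrative fixed input so the result can be checked by hand
x = torch.tensor([[1.0, -0.5],
                  [-1.0, 3.0]])
output = m(x)

# Negative entries become 0, non-negative entries are unchanged
print(output.tolist())  # [[1.0, 0.0], [0.0, 3.0]]

# ReLU(x) = max(0, x), written as an element-wise clamp
assert torch.equal(output, torch.clamp(x, min=0))
```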

An implementation of CReLU - https://arxiv.org/abs/1603.05201

```python
>>> m = nn.ReLU()
>>> input = torch.randn(2).unsqueeze(0)
>>> output = torch.cat((m(input), m(-input)))
```
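CReLU keeps ReLU of both the input and its negation, so neither the positive nor the negative part is discarded. The sketch below replaces `torch.randn` with a fixed input (an assumption for readability) to show what the snippet above produces: `torch.cat` joins the two results along dim 0, its default, so the (1, 2) input becomes a (2, 2) output.

```python
import torch
from torch import nn

m = nn.ReLU()
# Fixed illustrative input instead of torch.randn(2)
x = torch.tensor([1.0, -2.0]).unsqueeze(0)  # shape (1, 2)

# Row 0: ReLU(x) keeps the positive part; row 1: ReLU(-x) keeps the negative part (negated)
output = torch.cat((m(x), m(-x)))           # concatenated along dim 0 -> shape (2, 2)
print(output.shape)     # torch.Size([2, 2])
print(output.tolist())  # [[1.0, 0.0], [0.0, 2.0]]
```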

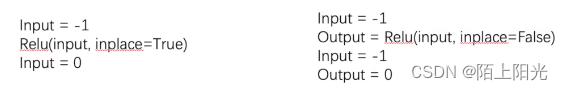

inplace

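inplace=True: in-place operation; the values of the input variable itself are overwritten.

inplace=False: a new variable `output` is created to hold the result of applying ReLU to `input`. This is the default (False), and it preserves the original input data.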
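A minimal sketch of the difference, using a small illustrative tensor: with the default `inplace=False` the input keeps its values, while `inplace=True` overwrites the input and the returned tensor shares its storage (checked here via `data_ptr()`).

```python
import torch
from torch import nn

# inplace=False (default): a new tensor is returned, x is untouched
x = torch.tensor([-1.0, 2.0])
out = nn.ReLU(inplace=False)(x)
print(x.tolist(), out.tolist())  # [-1.0, 2.0] [0.0, 2.0]

# inplace=True: y itself is overwritten, and the result is the same storage as y
y = torch.tensor([-1.0, 2.0])
out_inplace = nn.ReLU(inplace=True)(y)
print(y.tolist())                              # [0.0, 2.0]
print(out_inplace.data_ptr() == y.data_ptr())  # True
```

In-place activation saves memory, but it is unsafe whenever the original input is still needed afterwards (for example, for logging or by another branch of the network).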

Common activation 2: Sigmoid

```python
>>> import torch
>>> from torch import nn
>>> m = nn.Sigmoid()
>>> input = torch.randn(2)
>>> output = m(input)
```
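Sigmoid computes 1 / (1 + exp(-x)), squashing any real input into (0, 1). The sketch below (illustrative inputs, chosen here) checks `nn.Sigmoid` against that formula written out by hand; note that sigmoid(0) is exactly 0.5.

```python
import torch
from torch import nn

m = nn.Sigmoid()
x = torch.tensor([-2.0, 0.0, 2.0])
output = m(x)

# Sigmoid(x) = 1 / (1 + exp(-x))
manual = 1 / (1 + torch.exp(-x))
print(torch.allclose(output, manual))  # True
print(output[1].item())                # 0.5
```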

Danmaku (viewer comments on the video):

An activation layer amplifies the differences between the scores of different classes.

For binary classification, use sigmoid in the output layer and ReLU in the hidden layers.
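A minimal sketch of that layout with `nn.Sequential`, assuming hypothetical sizes (4 input features, 8 hidden units) and random input data: ReLU follows the hidden `nn.Linear`, and a final `nn.Sigmoid` turns the single output into a probability that can be thresholded at 0.5.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical sizes: 4 input features, 8 hidden units, 1 output
model = nn.Sequential(
    nn.Linear(4, 8),
    nn.ReLU(),        # hidden-layer activation
    nn.Linear(8, 1),
    nn.Sigmoid(),     # output layer: probability of the positive class
)

x = torch.randn(3, 4)        # a batch of 3 random samples
prob = model(x)
print(prob.shape)            # torch.Size([3, 1])
pred = (prob > 0.5).long()   # class labels 0 or 1
```

During training, PyTorch users often drop the final `nn.Sigmoid` and use `nn.BCEWithLogitsLoss` on the raw outputs instead, since that loss applies the sigmoid internally in a numerically stable way.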

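Where negative values come from: the input data; convolution kernels; normalization; negative values produced by gradient-descent updates during backpropagation. [Uncertain]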

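`torch.reshape(input, (-1, 1, 2, 2))` converts the 2×2 tensor `input` into a tensor of shape (-1, 1, 2, 2), where -1 stands for the total number of elements divided by the product of the other dimension sizes, i.e. -1 = (2×2)/(1×2×2) = 1.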
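The inference of -1 can be checked directly. The sketch below uses an illustrative 2×2 tensor (4 elements), then a tensor with 8 elements to show that the inferred size changes with the element count while the other dimensions stay fixed.

```python
import torch

inp = torch.tensor([[1.0, -0.5],
                    [-1.0, 3.0]])        # shape (2, 2), 4 elements
out = torch.reshape(inp, (-1, 1, 2, 2))
# -1 is inferred as 4 / (1 * 2 * 2) = 1
print(out.shape)  # torch.Size([1, 1, 2, 2])

# With 8 elements, the same target shape infers -1 = 8 / (1 * 2 * 2) = 2
print(torch.reshape(torch.arange(8.0), (-1, 1, 2, 2)).shape)  # torch.Size([2, 1, 2, 2])
```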

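The main purpose of a nonlinear transformation is to introduce nonlinear features into the network. The more nonlinearity there is, the more varied the nonlinear curves (or features) the network can learn, and the better the model generalizes.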
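One way to see why the activation is needed: two stacked `nn.Linear` layers with nothing in between collapse into a single linear layer, since W2(W1x + b1) + b2 = (W2W1)x + (W2b1 + b2). The sketch below (random layer sizes and data chosen for illustration) verifies that identity numerically, then shows with scalar inputs that ReLU breaks the midpoint rule g((p+q)/2) = (g(p)+g(q))/2 that every affine map satisfies.

```python
import torch
from torch import nn

torch.manual_seed(0)
f1 = nn.Linear(3, 5)
f2 = nn.Linear(5, 2)
x = torch.randn(4, 3)

# Without an activation, f2(f1(x)) equals one linear layer with these parameters
W_eq = f2.weight @ f1.weight            # shape (2, 3)
b_eq = f2.weight @ f1.bias + f2.bias    # shape (2,)
print(torch.allclose(f2(f1(x)), x @ W_eq.T + b_eq, atol=1e-6))  # True

# ReLU is not affine: the midpoint rule fails for p = 1, q = -1
p, q = torch.tensor([1.0]), torch.tensor([-1.0])
print(torch.relu((p + q) / 2).item(), ((torch.relu(p) + torch.relu(q)) / 2).item())  # 0.0 0.5
```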

Code

```python
import torch
import torchvision.transforms
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from torchvision import datasets

# CIFAR-10 test split; ToTensor() converts images to tensors with values in [0, 1]
test_set = datasets.CIFAR10('./dataset', train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(test_set, batch_size=64, drop_last=True)


class Activation(nn.Module):
    def __init__(self):
        super(Activation, self).__init__()
        self.relu1 = ReLU(inplace=False)
        self.sigmoid1 = Sigmoid()

    def forward(self, input):
        # ReLU would leave ToTensor() images (all values >= 0) unchanged,
        # so the sigmoid output is the one logged for comparison
        # output1 = self.relu1(input)
        output2 = self.sigmoid1(input)
        # return output1
        return output2


writer = SummaryWriter('logs')
step = 0
activate = Activation()

for data in dataloader:
    imgs, target = data
    # Log the original batch and the activated batch at the same step
    writer.add_images("input", imgs, global_step=step)
    output = activate(imgs)
    # writer.add_images("output1", output, global_step=step)
    writer.add_images("output2", output, global_step=step)
    step += 1

writer.close()
```
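Why the ReLU output is commented out: `ToTensor()` yields pixel values in [0, 1], and ReLU leaves non-negative values unchanged. The sketch below checks this without downloading CIFAR-10, assuming a random stand-in batch from `torch.rand` with the same (N, C, H, W) shape. It also shows that Sigmoid maps [0, 1] into roughly [0.5, 0.731], which compresses the pixel range and lowers the contrast of the logged `output2` images.

```python
import math

import torch
from torch import nn

# Random stand-in for one CIFAR-10 batch after ToTensor(): values in [0, 1), shape (N, C, H, W)
imgs = torch.rand(64, 3, 32, 32)

relu_out = nn.ReLU()(imgs)
sigmoid_out = nn.Sigmoid()(imgs)

# ReLU is the identity on non-negative pixels, so "output1" would look exactly like "input"
print(torch.equal(relu_out, imgs))  # True

# Sigmoid squeezes [0, 1) into [0.5, 1 / (1 + e^-1)), about [0.5, 0.731)
upper = 1 / (1 + math.exp(-1))
print(sigmoid_out.min().item() >= 0.5, sigmoid_out.max().item() <= upper + 1e-6)  # True True
```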

logs